Hi guys!

Welcome to this first post of november.

This time we will talk about one of my favourite topics: database theory.

We will talk about managing concurrent updates in ARIES based database systems like our Microsoft SQL Server but also PostgreSQL or DB2 or others are.

We have already talked about ARIES some posts ago here: Who’s who in the database world: C. Mohan (The ARIES Algorithm) and I suggest to take a look at it.

What will be discussed today is the work done by three researchers Hanuma Kodavalla, Raghavendra Thallam Kodandaramaih and Girish Mittur Venkataramanappa who works at Microsoft.

Concepts and the arguments explained here are used also in SQL Server starting from the 2019 version.

Enjoy the reading!

Introduction

As seen in the aforementioned post the ARIES protocol rely on the Write Ahead Logging (WAL) technique.

This technique guarantee two of the four ACID properties: atomicity and durability.

In particular the Durability property and so "no data loss" is guaranteed due to a crash because the WAL technique also mandates that the log record corresponding to the change is persisted to disk before the updated page.

What happens under the hood is that for each update the database systems latch the page exclusively for the entire duration of log generation and the change on the page.

This prevents other threads from modifying the page at the same time, however this cause the reducing of the concurrency and have an impact on the throughput of the system.

Because increasing the throughput of the system is a key factor today researchers have studied how to improve this aspect.

This is what we will talk about today.

From Exclusive to Shared Lock

Different methods have been studied to increase the throughput.

Researchers at Microsofts have focused on an approach that allows you to support concurrency updates for certain types of pages under a shared lock using a lock-free algorithm.

Even if this approach is suitable for various types of pages here we will only refer to PFS pages. This is because the microsoft development team has implemented the "concurrent update for PFS type pages" in the SQL Server 2019 version.

Let’s make a small digression on the PFS pages.

PFS Pages

A common feauture that ARIES database systems come with is the presence of a special type of pages used for space management.

For example, System R calls these pages free space inventory system (FSIPs), while IBM DB2 calls them Space Map Pages (SMP) and PostgreSQL call them Free Space Map (FSM).

Microsoft SQL Server calls the pages, used to track the free space in the system, with the acronym Page Free Space (FPS)

Why this type of page is important?

This type of page is important because it permit the optimization of the performance. Thank to this this of pages we can avoid to read empty pages and we can indentify pages that can be used for insert data without can the entire object.

Thanks to the FPS pages we can increase the level of concurrency.

The downside that this technique brings with it is that frequent update to these pages becomes a source of latch contention.

Sql server users in reality have been dealing with this type of problem for years.

Using a classic ruse:

Since there is one FPS page for every 64 MB chunk of data, spreading data over different data file or over different Chunk of data contributed to alleviate the Contention.

You cannot force the allocation of data into a specific chunk of data but you can easily create multiple datafile.

SQL 2019: A lock-free algorith to manage PFS pages

The lock-free algorithm presented can be used for various type of pages.

It was introduced in SQL Server 2019 to manage PFS pages because it is very effective to increase concurrency.

Let's see how it works!

PFS page updates are caused by the update to the underlying data page.

Updates to the PFS pages are done under the scope of higher order lock while doing data page updates.

Sometime the update to the data page is done first, sometime the update to PFS page is done first.

The order can change.

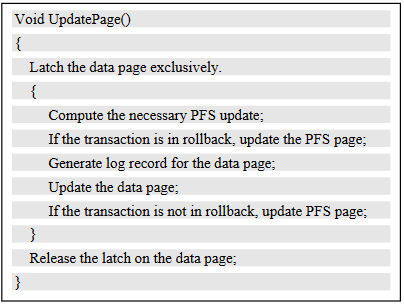

In the following image we can observe the sequence of steps that occurs when updating a page of data in SQL Server:

Firstly, you can observe that the data page is latched with an exclusive lock.

Then:

The log record for the data page is generated.

The content of the data page is updated

Now the PFS page is updated too but only if it is necessary

Finally the exclusive latch on the data page is released.

This Sequence of steps does not change with the new lock free algorithm that uses a shared latch, instead changes rather in the way the PFS page is updated.

In the following image you can observe the schema of the original algorithm on the left and the schema of the new algorithm on the right:

You will immediately notice that a shared latch is used instead of an update latch.

Yes this is foundamental!

Let’s review the lock modes in SQL server:

An Update (UP) latch is used to perform updates on a page.

Only one thread can acquire the UP latch.

This latch is compatible with a Shared (SH) Latch but not with a UP or Exclusive (EX) latch.

A Shared (SH) latch support concurrent thread reading the same page. Multiple Thread can acquire SH Latch.

The last type of latch is the Exclusive (EX) latch. Only one thread can acquire this latch and it is not compatible with UP or SH latch.

Since the new algorithm uses a shared latch (instead of a update latch) updates on PFS pages no longer need to be serialized and then run one at a time.

This is the big gain!

A Limit...

But let’s say that there is a limit: updates on PFS pages can occur simultaneously only if they update different bytes within the PFS page.

However we can say that this happens thanks to the exclusive latch on the data page.

Allowing the update of the same page PFS is part of multiple threads is definitely more complicated. An interlock operation execute of this operation as you can see from the image above.

Allowing multiple threads to update the same PFS page is definitely more complicated. An interlock operation execute of this operation this as you can see from the image above.

Other components of SQL Server get impacted to support concurrent updates, for example the Buffer Management has been modified, the dirty page manager and the log manager have been modified too.

We will not go into further details for today! You can find other info in the original document

Here

That's all for today, I wish a great week end!

~Luca

But remember:

Comments

Post a Comment