SQL Server, Today I tell you why your Query is slow. Recompilation problems

Today, as we often do, we talk about slowdowns.

To be honest, one of the questions I get asked most often is this:

"I have a Query that works fine for me and is very fast but when I try it from on customer’s server it is very slow! ...Why?"

To answer this question, let's think first of all of everything that changes from your environment to that of the customer: everything!

The data

Are you querying the same data?

SQL Server Optimizer creates the execution plan based on the "data it finds to read" (and store the execution plan inside the cache plan for performance reason.)

So suppose you have taken a copy of the customer data via a backup and therefore have the same data.

Are you already okay?

No, absolutely! What you need is to have the same

statistics and the same index fragmentation.

You will therefore understand the first reason why the tests made on your PC are not very significant.

Tests must be done on the client's server in order to test your Query on the database.

Concurrency

Another important reason is data concurrency!

If you want to try a query in an environment where only you work, you will not have concurrency problems.If you execute the same query while other users are working on the same tables that your query uses, you will see that the execution times will be quite different.

Environment

Even a different environment can lead to a different execution plan. For example, more processors or more RAM.

And then ... There are still cases in which a query performed sometimes becomes slow ..

We have talked about all these concepts many times in the various posts and what has just been written is just a small summary.

However, there are cases where a query that is executed sometimes becomes slow.

Now we will look to one of these cases that is called recompilation problem.

Recompilation problem

A little recap.For each query executed the Optimizer of SQL server create an execution plan. A way to execute your query.

There are many ways to solve your query and SQL Server has a fraction of time to find the optimal execution plan.

Not the best.

Since the creation of the execution plan of the query in an important part of the execution time, SQL Server store the execution plan in an plan cache and tend to reuse it.

If you send the same query twice, the second execution will be faster because the execution plan is already in memory (SQL Server already knows how to solve the Query)

As mentioned this is done for performance reasons but it also opens a door to sniffing problems.

In fact, sometimes the data is not evenly distributed.

For example, suppose you ask for the number of invoices per customer, there may be customers who have many invoices and customers who have few.

What happens in this situation?

Suppose you are querying the number of invoices for a customer who has few invoices.

The generated execution plan will be optimal for this query. For example the joins will be realized through nested loops.

If we now run the second query by changing the client, the execution plan will be reused.

However, it will not be optimal. In fact, if the customer has many invoices, perhaps it would have been better to implement the joins with hashe or merge joins.

All this to give an example of the fact that the sniffing problem (which we talked about a lot in previous posts) is a real problem.

Yes you can choose to recompile your query each time … but the performance would be even worse!

All this to say that recompilations are heavy operations to avoid when possible.

The problem we want to describe today is precisely this: we are faced with continuous recompilations of our Queries ( Recompilation problem).

How can this happen?This problem happen when the execution plan of your Query is invalidated.

An execution plan is invalidated when the structure of one or more of the tables indicated in the plan is changed.

The same happens if an index indicated in the execution plan is changed.

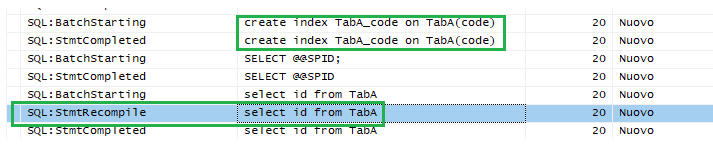

Let's see an example.

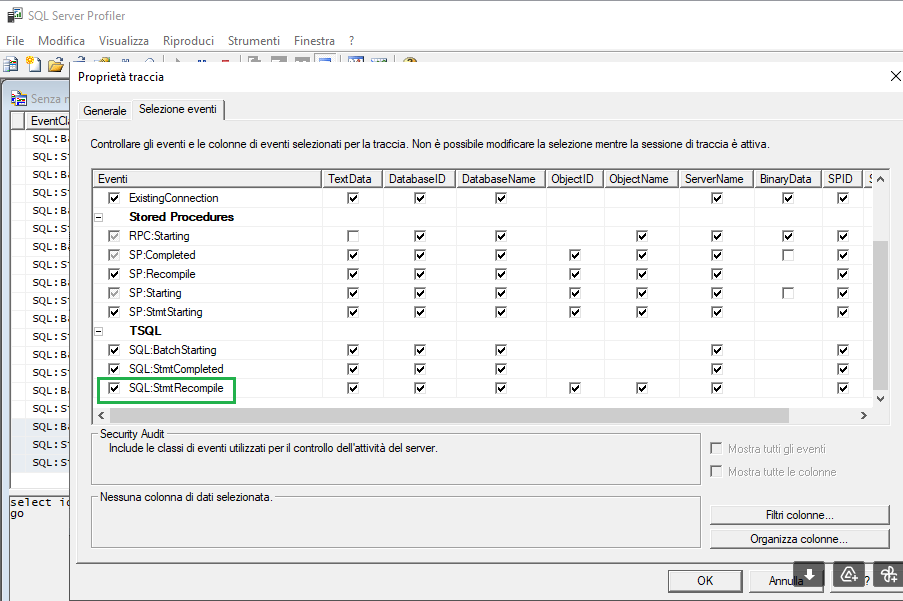

First of all we need to trace the recompilation of the Query. The simplest way of to open a profiler and check the SQL:StmtRecompile option.

Now, create a new simple table.

CREATE TABLE TabA (id INT IDENTITY(1,1) PRIMARY KEY , code VARCHAR(20))

Then execute the following select:

SELECT ID FROM TabA

From the profiler we will see the start and the end of our statement:

Now we will create an index

CREATE INDEX TabA_codice ON TabA(code)

...and then execute the SELECT again.

This time we will see also the recompile event.

By doing again the SELECT the stmtRecomile disappear.

Other test show that using these operations are instead safe:

andDbcc dbreindex(TabA)

alter index TabA_code on TabA REBUILD

While disable and enable ad index will cause recompilation.

alter index TabA_code on TabA DISABLE

alter index TabA_code on TabA REBUILD

Conclusion

When we write a program we are careful especially inside the loops we avoid adding indexes or we are careful when we do these operations.

Indexes should be created after populating the table with data.

That's all for today!

Luca

A little experiment .. only for the month of May to the first two who will donate at least $ 10 I will offer personalized support to optimize a Query .. write in case you are interested!

![]()

Previous post: SQL Server, datetime vs. datetime2

Comments

Post a Comment