SQL Server, How to Reduce the Last Page Insert Latch Contention

Welcome back guys!

Two posts ago i blogged about Choosing the Clustered Key and the last page insert latch contention problem

Two posts ago i blogged about Choosing the Clustered Key and the last page insert latch contention problem

I remember last time we broke up talking about some possibilities that you can adopt such as:

- Adopt a random clustered key value

- Reverse index technique

- Enable OPTIMIZE_FOR_SEQUENTIAL_KEY option in sql 2019

- In memory technique

- Partitioning technique

Today we make some considerations on how to reduce the contention of the last page latch (also by giving examples) by applying these methods:

- Adopt a random clustered key value (GUID)

- Reverse index technique

We will run some tests to measure both the Contention generated and the Execution time.

To generate a workload by running multiple simultaneous sessions of our query we use a microsoft tool named RML Utilities that i mentioned (at the momentin only in italian) here

We also run the test to have our baseline which is inserting data into a table with an autoincrement integer ID column with a clustered index defined on it.

Are you Ready? go!

Table with an autoincrement integer ID column

The time and contenction generated is the baseline for all our reasoning!

As just said, in this case, we want to measure latch contenction generated by inserting data in a table with an integer autoincrement ID column with a clustered index defined on it.

The table has the following structure:

CREATE TABLE TEST.DBO.PRODUCTS

(ID INT IDENTITY (1,1),

CODE VARCHAR(50),

DESCR VARCHAR(200))

CREATE CLUSTERED INDEX IDX_PRODUCTS_ID ON PRODUCTS (ID)

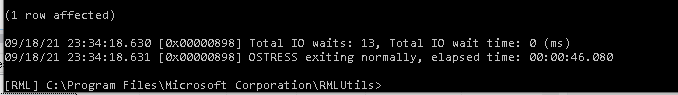

As mentioned we use the RML utilities to perform the stored procedure SP_INSART with 400 concurrent threads for 200 times.

CREATE PROCEDURE SP_INSART AS

BEGIN

DECLARE @VALID INT = DATEPART(ms,getdate())

INSERT INTO TEST.DBO.PRODUCTS(CODE,DESCR)

SELECT CODE,DESCR FROM TEMPDB.DBO.PRODUCTLIST

WHERE ID = @VALID

END

For the sake of clarity, I have added a WHERE clause so that a different row of the products table is read on each execution. Using the DATEPART function, I extract the milliseconds from the current time and then I will always get a value between 0 and 999. The productlist table must have more than 1000 rows.

DBCC FREEPROCCACHE WITH NO_INFOMSGS;

DBCC SQLPERF("sys.dm_os_wait_stats",CLEAR);

OStress.exe -iC:\Bin\ostress\Test_contenction.SQL -Usa -Pxxx -SHP-HP\SQLEXPRESS -dTEST -oC:\Bin\ostress -n400 -r200

WITH [Waits]

AS (SELECT wait_type, wait_time_ms/ 1000.0 AS [WaitS],

(wait_time_ms - signal_wait_time_ms) / 1000.0 AS [ResourceS],

signal_wait_time_ms / 1000.0 AS [SignalS],

waiting_tasks_count AS [WaitCount],

100.0 * wait_time_ms / SUM (wait_time_ms) OVER() AS [Percentage],

ROW_NUMBER() OVER(ORDER BY wait_time_ms DESC) AS [RowNum]

FROM sys.dm_os_wait_stats WITH (NOLOCK)

WHERE [wait_type] NOT IN (

N'BROKER_EVENTHANDLER', N'BROKER_RECEIVE_WAITFOR', N'BROKER_TASK_STOP',

N'BROKER_TO_FLUSH', N'BROKER_TRANSMITTER', N'CHECKPOINT_QUEUE',

N'CHKPT', N'CLR_AUTO_EVENT', N'CLR_MANUAL_EVENT', N'CLR_SEMAPHORE',

N'DBMIRROR_DBM_EVENT', N'DBMIRROR_EVENTS_QUEUE', N'DBMIRROR_WORKER_QUEUE',

N'DBMIRRORING_CMD', N'DIRTY_PAGE_POLL', N'DISPATCHER_QUEUE_SEMAPHORE',

N'EXECSYNC', N'FSAGENT', N'FT_IFTS_SCHEDULER_IDLE_WAIT', N'FT_IFTSHC_MUTEX',

N'HADR_CLUSAPI_CALL', N'HADR_FILESTREAM_IOMGR_IOCOMPLETION', N'HADR_LOGCAPTURE_WAIT',

N'HADR_NOTIFICATION_DEQUEUE', N'HADR_TIMER_TASK', N'HADR_WORK_QUEUE',

N'KSOURCE_WAKEUP', N'LAZYWRITER_SLEEP', N'LOGMGR_QUEUE', N'ONDEMAND_TASK_QUEUE',

N'PWAIT_ALL_COMPONENTS_INITIALIZED', N'QDS_PERSIST_TASK_MAIN_LOOP_SLEEP',

N'QDS_CLEANUP_STALE_QUERIES_TASK_MAIN_LOOP_SLEEP', N'REQUEST_FOR_DEADLOCK_SEARCH',

N'RESOURCE_QUEUE', N'SERVER_IDLE_CHECK', N'SLEEP_BPOOL_FLUSH', N'SLEEP_DBSTARTUP',

N'SLEEP_DCOMSTARTUP', N'SLEEP_MASTERDBREADY', N'SLEEP_MASTERMDREADY',

N'SLEEP_MASTERUPGRADED', N'SLEEP_MSDBSTARTUP', N'SLEEP_SYSTEMTASK', N'SLEEP_TASK',

N'SLEEP_TEMPDBSTARTUP', N'SNI_HTTP_ACCEPT', N'SP_SERVER_DIAGNOSTICS_SLEEP',

N'SQLTRACE_BUFFER_FLUSH', N'SQLTRACE_INCREMENTAL_FLUSH_SLEEP', N'SQLTRACE_WAIT_ENTRIES',

N'WAIT_FOR_RESULTS', N'WAITFOR', N'WAITFOR_TASKSHUTDOWN', N'WAIT_XTP_HOST_WAIT',

N'WAIT_XTP_OFFLINE_CKPT_NEW_LOG', N'WAIT_XTP_CKPT_CLOSE', N'XE_DISPATCHER_JOIN',

N'XE_DISPATCHER_WAIT', N'XE_TIMER_EVENT')

AND waiting_tasks_count > 0)

SELECT

MAX (W1.wait_type) AS [WaitType],

CAST (MAX (W1.WaitS) AS DECIMAL (16,2)) AS [Wait_Sec],

CAST (MAX (W1.ResourceS) AS DECIMAL (16,2)) AS [Resource_Sec],

CAST (MAX (W1.SignalS) AS DECIMAL (16,2)) AS [Signal_Sec],

MAX (W1.WaitCount) AS [Wait Count],

CAST (MAX (W1.Percentage) AS DECIMAL (5,2)) AS [Wait Percentage],

CAST ((MAX (W1.WaitS) / MAX (W1.WaitCount)) AS DECIMAL (16,4)) AS [AvgWait_Sec],

CAST ((MAX (W1.ResourceS) / MAX (W1.WaitCount)) AS DECIMAL (16,4)) AS [AvgRes_Sec],

CAST ((MAX (W1.SignalS) / MAX (W1.WaitCount)) AS DECIMAL (16,4)) AS [AvgSig_Sec]

FROM Waits AS W1

INNER JOIN Waits AS W2

ON W2.RowNum <= W1.RowNum

GROUP BY W1.RowNum

HAVING SUM (W2.Percentage) - MAX (W1.Percentage) < 99 -- percentage threshold

OPTION (RECOMPILE);

GO

We have approximately a 16% of LATCH and 9% of PAGELATCH

Insert using a random clustered key value

For the second test we will insert data in a table where ID is of UniqueIdentifier datatype.

Doing so we should avoid the last page contention because at each insert we will insert data into a different data page.

This is the table:

CREATE TABLE TEST.DBO.PRODUCTS_HASH

(ID uniqueidentifier,

CODE VARCHAR(50),

DESCR VARCHAR(200))

This is the stored procedure that the file test_contenction_hash will exec.

CREATE PROCEDURE SP_INSART_HASH AS

BEGIN

DECLARE @VALID INT = DATEPART(ms,getdate())

INSERT INTO TEST.DBO.PRODUCTS_HASH(ID,CODE,DESCR)

SELECT NEWID(), CODE,DESCR FROM TEMPDB.DBO.PRODUCTLIST

WHERE ID = @VALID

END

As for the previous test we empty the cache and clear the statistics of the wait types:

DBCC FREEPROCCACHE WITH NO_INFOMSGS;

DBCC SQLPERF("sys.dm_os_wait_stats",CLEAR);

Now execute the OStress with this parameters:

OStress.exe -iC:\Bin\ostress\Test_contenction_hash.SQL -Usa -Pxxx -SHP-HP\SQLEXPRESS -dTEST -oC:\Bin\ostress -n400 -r200Just after the OStress command has finished execute the DMV

These are the results:

We have approximately a 3,6% of LATCH (instead of 16%) and 0,53% of PAGELATCH (instead of 9%)

The execution time was of 41.507 seconds instead of 46.080 seconds. Equal to 1920 rows inserted per second.

Is this case we can say that effectively we have less LATCH and PAGELATCH. Speed is increased by a +14%. Not bad!

Of course, the fact remains that the Cluster index on the uniqueidentifiers ID column has become too large.The size of the uniqueidentifiers is 16 bytes instead of 4 bytes of an integer.

The Reverse index technique

We need to generate programmatically an unique field distribuited evenly.

The idea is taking a number and inverting the order of the bits of which it is composed.

For example with an 8-bit number:

Number Binary value Value with inverted bits Value obtained

1 0000001 10000000 128

2 0000010 01000000 64

3 0000011 11000000 172

4 0000100 00100000 32

5 0000101 10100000 160

The function that reverses the order is this:

CREATE FUNCTION INVERTI_BIT (@Input int)

RETURNS INT

AS

BEGIN

DECLARE @WorkValue int=@Input

DECLARE @Result int=0;

DECLARE @Counter int=0;

WHILE @Counter < 31

BEGIN

SET @Result=@Result*2

IF (@WorkValue&1)=1

BEGIN

SET @Result=@Result+1

SET @WorkValue=@WorkValue-1

END

SET @WorkValue=@WorkValue/2

SET @Counter=@Counter+1

END

RETURN @Result

END

ALTER PROCEDURE SP_INSART_INVERTED AS BEGIN DECLARE @VALID INT = DATEPART(ms,getdate())INSERT INTO TEST.DBO.PRODUCTS_REVERSE(ID,CODE,DESCR)SELECT dbo.INVERTI_BIT(ID),CODE,DESCR FROM TEMPDB.DBO.PRODUCTLISTWHERE ID = @VALID END

The table where data will be inserted is so defined:

CREATE TABLE TEST.DBO.PRODUCTS_REVERSE

(ID INT,

CODE VARCHAR(50),

DESCR VARCHAR(200))

CREATE CLUSTERED INDEX IDX_PRODUCTS_ID ON PRODUCTS_REVERSE (ID)

Let's summarize

What we observed from the tests is that actually inserting a progressive ID generates the last page contention.

In case we have this problem we can alleviate it by generating not a progressive but a random key value.

As the latch contenction decreases, it is not certain that the insertion time will decrease in the same way.

That's all for today! but remember to stay tuned!

The next time we will speak about another way to decrease the Last Page Latch Contenction.

I am sure it will be very interesting!

Luca Biondi @ SQLServerPerformance blog 2021!

Next post:

Previous post: SQL Server, Difference between a seek and a scan operation and the Latch coupling

Comments

Post a Comment